Why analyzing Marketing feels impossible

I’ve been both a marketing and a product data scientist, and if I could go back and start my career over, I would choose to be a product data scientist 1,000 times over. Only those who are a bit masochistic would choose to be in Marketing Data Science.

And every so often, I hear a question that completely stumps me and it’s what prompted me to write this article: “Why don’t we understand how our Marketing is doing?” My usual response would be to just drop my jaw and ask if they were serious, but I realized that it’s a very valid question. WHY is Marketing data never as simple as it looks?

Here are 10 reasons we’ll cover today:

Attribution

Seasonality

Data pipelines

Product errors

Channel biases

Algorithm updates

Campaign changes

Stakeholder opinions

Cross channel impact

Experimentation costs

Attribution

Just look at the image above and you’ll immediately know why attribution alone makes analyzing Marketing feel impossible. 6 different approaches. Attribution is the bane of our existence, but it’s a necessary evil. Humans want certainty, and attribution provides it, but it adds an insane amount of complexity.

First, no one can agree on attribution, yet everyone knows it’s wrong. Only in Marketing Data Science do you set something up that you know is wrong but still use it because it’s better than having nothing. Absurdity. On top of that, attribution rarely stays consistent. Many of you have probably experienced broken attribution, changes to the attribution model, or some other shock to the marketing data that makes you revisit all of your assumptions.
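To make the disagreement concrete, here is a minimal sketch of how a single conversion gets credited under three common attribution models. The journey and the channel names are made up for illustration, and real models (time decay, position based, data driven) are more involved than this.

```python
from collections import defaultdict


def attribute(path, model):
    """Split the credit for one conversion across the channels in `path`.

    `path` is the ordered list of channels a user touched before converting.
    """
    credit = defaultdict(float)
    if model == "first_click":
        credit[path[0]] += 1.0
    elif model == "last_click":
        credit[path[-1]] += 1.0
    elif model == "linear":
        # Every touch gets an equal share of the conversion.
        for channel in path:
            credit[channel] += 1.0 / len(path)
    else:
        raise ValueError(f"unknown model: {model}")
    return dict(credit)


# Hypothetical journey: the user saw a social ad, opened an email, then searched.
journey = ["paid_social", "email", "paid_search"]

for model in ("first_click", "last_click", "linear"):
    print(model, attribute(journey, model))
```

Same journey, same conversion, three different answers about which channel "worked". Swap the model and every channel report changes with it.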

Attribution makes analyzing Marketing feel impossible.

Seasonality

Seasonality is the world’s greatest excuse for an answer to 95% of questions. And the reason is that 95% of the time it’s true. Across the hundreds of analyses I’ve done, seasonality has been the primary root cause in most of them. But seasonality doesn’t impact JUST the behavior of users. Oh no. It’s more than that.

If seasonality around Thanksgiving were just a matter of knowing that shoppers stop buying in early November to save up for the last week, that would be one thing. But you also have to deal with the seasonality of Marketing itself.

Picture this Marketing scenario that you have to contend with:

Shoppers buy less around early November but also…

CPMs start to go up throughout the month of November because…

Everyone in the world wants to advertise for Black Friday which means…

Your competitive landscape has seasonality too

So, not only do your customers have seasonality but so do your CPMs, your conversion rates, your competitive landscape, and most excitingly your CMO also has a seasonality to their mood. No one loves November CMO.
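One crude way to check the "it’s just seasonality" answer is to compare this year’s week-over-week change with the same change last year and look at what’s left over. The sketch below does that with made-up signup numbers. It assumes last year is a fair baseline, which, given everything above about CPMs and competitors, is not guaranteed.

```python
# Hypothetical weekly signups; every number here is made up for illustration.
signups = {
    "last_year": {"week_44": 1000, "week_45": 800},
    "this_year": {"week_44": 1200, "week_45": 930},
}


def pct_change(new, old):
    """Relative change from old to new, e.g. -0.2 for a 20% drop."""
    return (new - old) / old


def seasonal_residual(data, prev_week, week):
    """Return this year's WoW change, last year's, and the gap between them."""
    this_year = pct_change(data["this_year"][week], data["this_year"][prev_week])
    last_year = pct_change(data["last_year"][week], data["last_year"][prev_week])
    return this_year, last_year, this_year - last_year


raw, baseline, residual = seasonal_residual(signups, "week_44", "week_45")
print(f"This year WoW:   {raw:.1%}")
print(f"Last year WoW:   {baseline:.1%}")
print(f"Left to explain: {residual:.1%}")
```

If the leftover is small, seasonality is probably a fine answer. If it’s large, something else moved too, and that’s where the rest of this list comes in.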

Seasonality makes analyzing Marketing feel impossible.

Data Pipelines

The product analytics pipeline is fairly simple. Track a bunch of events and ingest as 1st party data. I know I’m simplifying but let’s compare it to the complexity of the MarTech stack:

There are over 14,000 MarTech vendors for any piece of the Marketing Journey. Want a vendor that just sends a good morning email to your users every day? There’s probably a vendor for that.

Now, having that many vendors sounds great BUT the problem is how little companies invest in Marketing vs Product. Look at the # of product engineers in your company vs marketing engineers. It’s probably an absurd ratio of like 5:1. Is product really driving 5x the value, especially when almost all of the variable cost of a company is under Marketing? No.

So, you bring in a handful of engineers to solve the “small” problem of tracking 3rd party data across multiple websites to try and perfectly attribute, and lo and behold, your data pipelines suck. And to fix it? “Ah, we’ll try and do it on the 2026 Q3 roadmap”. Cool cool cool.

Data pipelines make analyzing Marketing feel impossible.

Product errors

Product and Marketing do not live in silos. While campaign changes (which we’ll discuss) are definitely one reason marketing numbers move, the reality is that Marketing is there to amplify a good product. If your product sucks or breaks, then no amount of Marketing can fix it. But who is usually responsible for signups? Marketing.

So, product errors and the lack of visibility into and communication with product are a huge reason why Marketing is challenging. There’s often no connection between the two programs, so when something like signups drops, the first finger is pointed at Marketing. But the reality is that it’s hard to break Marketing over the course of a day or a week, and extremely easy to break product within a minute. Too many times I’ve spent all day digging in Meta or Google Ads only to realize that the landing page, app, or link was broken.

Product errors make analyzing Marketing feel impossible.

Channel biases

Every marketing channel has its own unique biases. Everything from different demographics to different behaviors to different capabilities. Which means much of the data requires a heavy amount of context to analyze. If you’re analyzing one channel, that’s fine, but imagine you’re the one analyst helping a marketer with Paid Search + Paid Social + CRM. Good luck my friend. You have to know that Paid Search is going to be heavily last click biased and that Paid Social will “appear” expensive compared to Paid Search but has higher reach (not to mention it’s a push channel). Then for CRM you have to understand how to analyze open, click, and unsubscribe rates. These “biases” add up, and they are so noisy that it becomes difficult to separate signal from noise.

Let’s not even get started on offline channels. Those are in a world of their own and it’s an even hairier monster. These channels tend to be more “brand building” which makes measurement, education, and optimization incomparable.

Channel biases make analyzing Marketing feel impossible.

Algorithm updates

Let’s ignore the fact that this chart above is from 2015. If I hadn’t told you that, you could have guessed it was from March of this year, when Google rolled out a new core update. It could also be from LinkedIn after making its one thousandth feed algorithm update.

Every digital Marketer is beholden to some algorithm, and just like seasonality it can explain 95% of drops that happen overnight. Now that’s fine if you have to explain it in next week’s business review, but these updates happen frequently, are poorly documented, and behave unpredictably. Then try remembering why that happened last year when you see a YoY chart.

The expectation of course is that you recapture where you were and so you have to make a bunch of updates and it sets you back weeks / months depending on your speed of execution.

Algorithm updates make analyzing Marketing feel impossible.

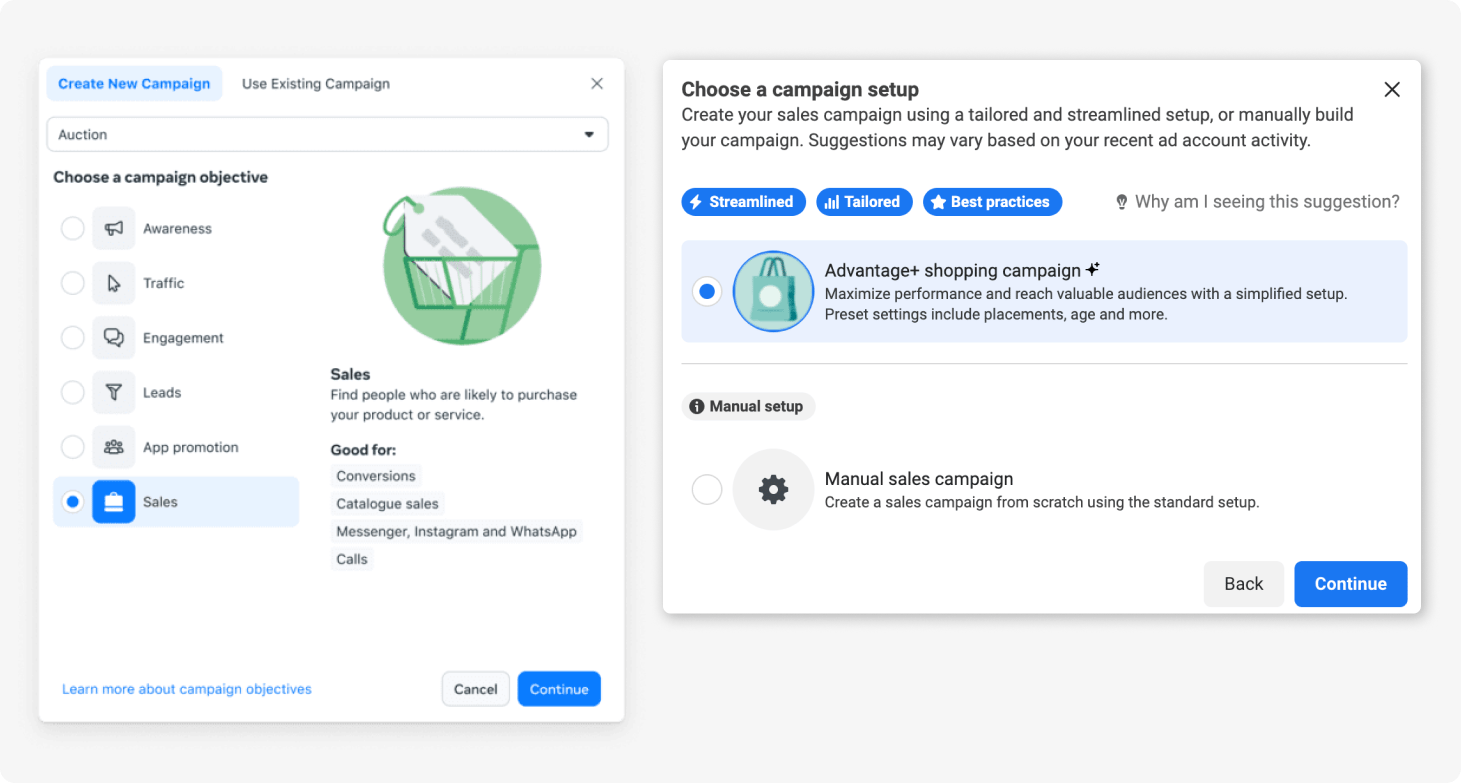

Campaign changes

This one might feel a bit like “Ugh I did some work and now I have to analyze that work” but hear me out.

At every stage of a company there’s an expectation that you should be able to change, update, and optimize campaigns quickly. Because of that, we’ve been programmed to just tweak things here and tweak things there.

The challenge with that is:

it’s not always clear what we tweaked

the impact isn’t always visible (re: data pipelines)

there’s a lot of guesswork as to what moved (re: seasonality)

These campaign changes are usually small in nature and while they might show up in platform they don’t always show up in the broader business data. This creates a dissonance between marketers who feel like they’re moving the needle (literally moving the needle by tweaking a ROAS target or something else) and the rest of the business who might not see it.

In addition, campaign changes if they’re big enough trigger algorithms to relearn which then puts analysis and measurement into a tailspin. If you go too small, you can’t see signal. If you go too big, you trigger relearning and can’t see signal. The joy!

Campaign changes make analyzing Marketing feel impossible.

The additional factor is that you only have 4 weeks in a month and 52 weeks in a year, so if you’re spending half of that time just waiting, your stakeholders aren’t going to be happy, which brings me to…

Stakeholder opinions

Marketing is one of those disciplines where everyone is an expert. We’ve all experienced it.

“Ahh that creative isn’t good enough”

“What if we changed this copy”

“I want us to go faster”

“Be bolder”

And unsurprisingly, that makes Marketing data a lot harder to analyze because the context behind each change is not the same.

“I wonder if it flopped because XYZ?”

“Didn’t ABC also do this at the same time?”

So when you’re presenting Marketing data you inevitably run into many experts on the topic and it makes marketing data harder to analyze.

Stakeholder opinions make analyzing Marketing feel impossible.

Cross channel impact

For most businesses, there is only one product. One place where things can happen. This makes isolating impact much easier. Contrast that with Marketing where there are often multiple channels. This cross channel impact makes it extremely challenging to isolate impact and therefore analyze the data.

Let’s revisit attribution. One week your Direct channel goes up 20%. You dig into attribution, your pipelines, etc and nothing is broken there. But you’re running multiple channels. What caused this to explode? Is it Channel A, B, C or some combination? In that moment, it becomes very difficult to assess impact.

While there are tools like MMM that can help with this, those are reserved for teams that have more budget, more sophistication, and more resources (the rich get richer). For the 99% of marketing teams that don’t have that, cross channel impact adds a lot of complexity. It’s why many marketers are channel experts who just see what’s happening in their own channels, while Marketing leadership has a tougher time pointing to what drove the impact.

Cross channel impact makes analyzing Marketing feel impossible.

Experimentation costs

On a recent podcast, I was asked why Marketing experimentation isn’t as established as product experimentation. The reason is that in product you often test into something, while in Marketing you test out of it.

In product, when you launch a new feature you A/B test it and introduce it above a baseline. Only if it meets certain thresholds and criteria is it introduced. In addition, the cost of experimentation is significantly lower. There’s a bit of eng costs and maybe platform costs if you’re using a vendor but that’s it.

Compare that with Marketing, where most new channels, campaigns, and creatives are often just launched. There’s no robust system of experimentation that lets you layer in Marketing (going back to many of the issues discussed before), so the default is to launch Marketing and then test out of it. That’s what an incrementality test is. You’re often seeking the optimal level of spend. Well, why don’t you test the optimal level of spend first? Why don’t you see the impact of doubling spend first and then double it, instead of the other way around? In addition, Marketing experimentation has an actual cost (the ad spend needed to run the test), which makes it riskier and harder to justify.

This all makes analyzing marketing harder because you have to backtrack to calculate the impact. For product experimentation, you know the expected impact before the launch of the feature. Marketing is often the opposite, and so analyzing data becomes painful. Finally, there’s the psychological impact of “loss aversion”, where people are scared that if they turn off marketing they’ll lose growth. Fair, but that’s the whole point…
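For anyone who hasn’t run one, here’s a rough sketch of the arithmetic behind a simple holdout-style incrementality test: one group sees ads, a holdout group doesn’t, and you compare conversion rates. All of the numbers and the function name are hypothetical, and a real test would also need a significance check before anyone acts on the result.

```python
def incrementality(treated_users, treated_conversions,
                   holdout_users, holdout_conversions, spend):
    """Estimate incremental conversions and their cost from a holdout test."""
    treated_rate = treated_conversions / treated_users
    holdout_rate = holdout_conversions / holdout_users

    # Conversions the treated group would likely NOT have had without ads.
    incremental = (treated_rate - holdout_rate) * treated_users
    lift = (treated_rate - holdout_rate) / holdout_rate

    # If ads drove nothing extra, the cost per incremental conversion is unbounded.
    cost_per_incremental = spend / incremental if incremental > 0 else float("inf")
    return {
        "incremental_conversions": incremental,
        "lift": lift,
        "cost_per_incremental": cost_per_incremental,
    }


# Made-up test: 100k users saw ads, 20k were held out, and $50k was spent.
result = incrementality(
    treated_users=100_000, treated_conversions=2_400,
    holdout_users=20_000, holdout_conversions=400,
    spend=50_000,
)
print(result)
```

Notice that the platform would happily report all 2,400 conversions in this made-up example, while the test only credits the ads with the gap over the holdout rate. That gap is the number you actually paid for.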

“I love experimenting!”

“Okay, but you might not get the results you want…”

“I don’t want to experiment!”

Experimentation costs make analyzing Marketing feel impossible.

Wrapping up

Everyone wants to understand how marketing is doing but few get why it's a hard question to answer. Now, you can point to 10 reasons why it is:

Attribution

Seasonality

Data pipelines

Product errors

Channel biases

Algorithm updates

Campaign changes

Stakeholder opinions

Cross channel impact

Experimentation costs

So if your CEO asks “Why don’t we understand how our Marketing is doing?” you can send them this article and remind them that these aren’t excuses. These are just the challenges we have to overcome.
